JustDone AI Detector: A Comprehensive Review

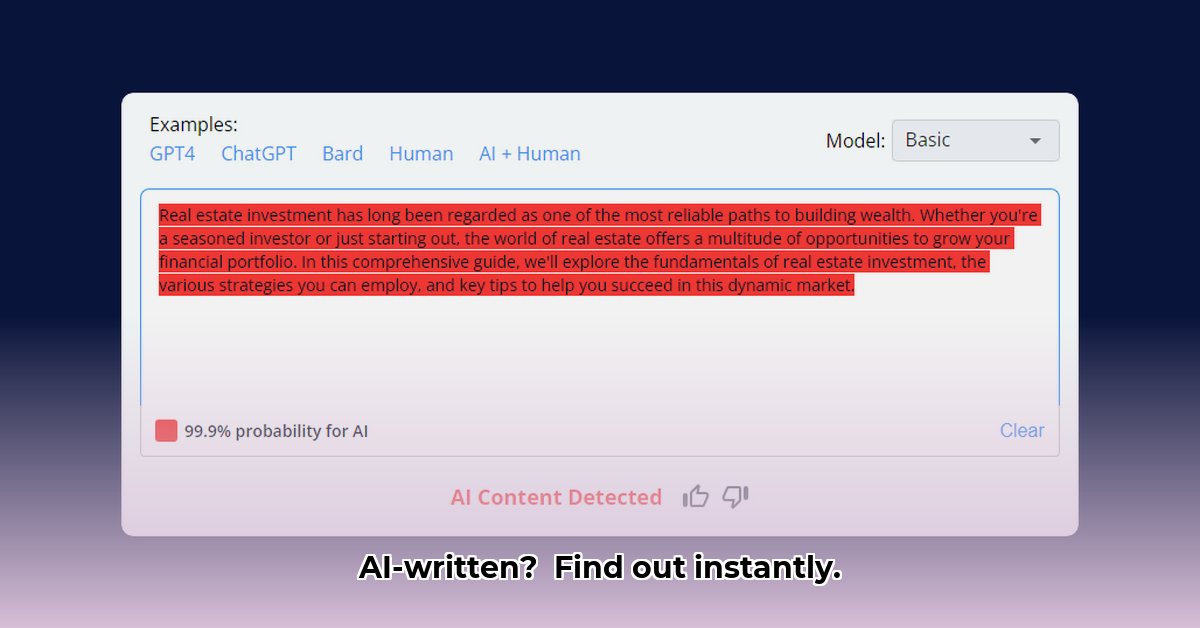

JustDone AI Detector aims to rapidly and accurately identify AI-generated text and plagiarism. This review analyzes its capabilities, limitations, and practical applications, providing insights for various user groups. While the tool shows promise, a lack of transparency regarding its methodology hinders definitive assessment of its true accuracy.

Accuracy: A Need for Transparency

JustDone claims impressive accuracy, citing extensive training data. However, it publishes no independently verifiable figures, such as how often it correctly identifies AI-generated text and how often it correctly identifies human-written text. This lack of concrete evidence is a significant shortcoming. Until the claims are independently confirmed, users should treat them with caution.
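To make concrete which figures would settle the question, here is a minimal sketch of how a reader could measure a detector on a small set of texts whose origin is already known. It does not call JustDone or any real API. The function name `evaluate_detector`, the `DetectionMetrics` class, and the sample labels are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class DetectionMetrics:
    accuracy: float             # share of all texts classified correctly
    false_positive_rate: float  # share of human-written texts flagged as AI
    false_negative_rate: float  # share of AI-generated texts passed as human


def evaluate_detector(true_is_ai, predicted_is_ai):
    """Compare a detector's verdicts against known labels.

    Both arguments are equal-length sequences of booleans,
    where True means "AI-generated".
    """
    if len(true_is_ai) != len(predicted_is_ai):
        raise ValueError("label and prediction lists must have the same length")
    if not true_is_ai:
        raise ValueError("at least one labeled text is required")

    pairs = list(zip(true_is_ai, predicted_is_ai))
    tp = sum(1 for t, p in pairs if t and p)          # AI text, flagged as AI
    tn = sum(1 for t, p in pairs if not t and not p)  # human text, passed
    fp = sum(1 for t, p in pairs if not t and p)      # human text, flagged as AI
    fn = sum(1 for t, p in pairs if t and not p)      # AI text, passed

    human_total = tn + fp
    ai_total = tp + fn
    nan = float("nan")  # a rate is undefined if that class is absent from the sample
    return DetectionMetrics(
        accuracy=(tp + tn) / len(pairs),
        false_positive_rate=fp / human_total if human_total else nan,
        false_negative_rate=fn / ai_total if ai_total else nan,
    )


if __name__ == "__main__":
    # Hypothetical sample: 3 AI-generated texts followed by 5 human-written ones.
    truth = [True, True, True, False, False, False, False, False]
    verdicts = [True, True, False, False, False, False, True, False]
    print(evaluate_detector(truth, verdicts))
```

Reporting the false positive and false negative rates separately matters because a single accuracy number can hide a detector that rarely misses AI text but often flags human writers, or the reverse.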

Transparency: Understanding the "Black Box"

The tool's inner workings remain largely opaque. The algorithms and technical details, along with the size and type of data used for training, are undisclosed. This opacity impedes independent evaluation, makes potential algorithmic biases harder to identify, and raises concerns about the tool's reliability and robustness.

Target Users and Applications

JustDone AI Detector offers utility across diverse sectors:

- Students: Helps check assignments for plagiarism before submission.

- Educators: Facilitates quick checks on submitted work, aiding in the identification of potentially AI-generated content.

- Researchers: Assists in verifying the originality of academic papers and publications.

- Content Creators: Provides a mechanism to ensure originality and identify potential instances of AI-generated content.

Its lack of a character limit and its monthly subscription model are attractive for users who need to analyze large volumes of text frequently.

Practical Strategies for Effective Utilization

The optimal approach to JustDone varies among stakeholders:

| Stakeholder | Short-Term Approach | Long-Term Strategy |
|---|---|---|
| Educational Institutions | Pilot testing alongside other detectors, integrating into existing workflows. | Developing clear AI content policies, conducting rigorous accuracy assessments, refining integration methods. |
| Researchers | Preliminary assessment with JustDone, corroborating findings with other AI detection methods. | Collaborative, independent evaluations; exploring advanced features; publishing findings and methodologies. |
| Content Creators | Utilizing JustDone for originality checks, adjusting writing styles based on feedback. | Integrating JustDone with other quality control methods, analyzing its ability to detect increasingly sophisticated AI writing. |
| JustDone Developers | Publishing detailed case studies demonstrating efficacy, providing complete information on algorithms and training data. | Developing advanced capabilities (e.g., stylistic analysis), seeking third-party verification, addressing potential biases. |

Limitations and Considerations: The Nuances of AI Detection

No AI detection tool is foolproof. JustDone, like others, is susceptible to false positives (labeling human-written text as AI-generated) and false negatives (missing AI-generated text). Because AI writing tools evolve rapidly, a detector needs continuous updates to remain effective. Cross-referencing results with other detection tools is therefore crucial. The tool's speed is useful, but it is no substitute for transparency about how its results are produced.
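As an illustration of cross-referencing, the sketch below combines scores from several detectors and only returns a verdict when enough of them agree. It is an assumption-laden example, not a description of how JustDone or any other product works. The detector names, the 0-to-1 "probability of AI" score scale, the `cross_reference` function, and both default parameters are hypothetical.

```python
def cross_reference(scores, threshold=0.5, min_agreement=1.0):
    """Combine AI-likelihood scores from several detectors into one verdict.

    scores: dict mapping a detector name to a score between 0 and 1,
            where higher means "more likely AI-generated" (assumed scale).
    threshold: score at or above which one detector counts as flagging the text.
    min_agreement: share of detectors that must agree before a verdict is given
                   (1.0 means unanimous).
    """
    if not scores:
        raise ValueError("at least one detector score is required")

    flags = [score >= threshold for score in scores.values()]
    ai_share = sum(flags) / len(flags)

    if ai_share >= min_agreement:
        return "likely AI-generated"
    if (1 - ai_share) >= min_agreement:
        return "likely human-written"
    # Detectors disagree: defer to a person instead of guessing.
    return "inconclusive: review manually"


if __name__ == "__main__":
    # Hypothetical scores from three unnamed tools for the same text.
    print(cross_reference({"detector_a": 0.9, "detector_b": 0.2, "detector_c": 0.6}))
```

Requiring agreement trades coverage for caution: more texts end up in the manual-review pile, but a single tool's false positive is less likely to be treated as a finding.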

How Accurate Is JustDone Compared to Competitors?

The accuracy of AI detection tools is a moving target. Many tools claim high accuracy, but comparing JustDone to competitors is hindered by a lack of standardized testing and publicly available benchmarks. AI writing technology advances quickly and detection methods must keep adapting, which makes direct comparisons difficult. Tools such as Originality.ai and GPTZero do report accuracy metrics, but judging the validity of those metrics requires understanding how each tool was tested.

Key Challenges in Comparing AI Detection Tools

- Constantly Evolving AI Writing: AI writing tools are continuously improving, making detection an ongoing challenge.

- Content Complexity: Simple text is easier to detect than sophisticated, nuanced writing.

- Training Data Differences: The effectiveness of a tool depends heavily on the type of AI-generated text it was trained on.

Actionable Intelligence: Practical Recommendations

- For Educators: Employ AI detection tools strategically, combining them with holistic assessment methods.

- For Researchers: Conduct independent evaluations of AI detection tools, publishing transparent methodologies and findings.

- For Content Creators: Recognize the limitations of AI detection, prioritizing the creation of high-quality, original content.

Key Takeaways

- JustDone AI Detector offers a quick assessment of AI-generated content.

- Transparency regarding accuracy and methodology is essential for building user trust.

- No AI detection tool provides perfect results; cross-referencing is always recommended.

- Understanding the limitations and constantly evolving nature of AI detection is crucial.